Executive Summary

In this post, we examine lateral movement techniques, showcasing some that we have observed in the wild within cloud environments. Lateral movement can be achieved by leveraging both cloud APIs and access to compute instances, with access at the cloud level potentially extending to the latter.

We explore cloud lateral movement techniques in all three major cloud providers: Amazon Web Services (AWS), Google Cloud Platform (GCP) and Microsoft Azure, highlighting their differences compared to similar techniques in on-premises environments.

Attackers often use different lateral movement techniques to gain access to sensitive data within an organization's network. Lateral movement techniques can certainly be part of attackers’ approach to on-premises environments, and many of the scenarios we discuss could have similarities to situations on-premises. Here, we focus on lateral movement techniques as we specifically observe them used in cloud environments, with the aim of helping defenders better prepare to improve their cloud security posture.

In cloud environments, attackers operate at two levels: the host or instance, and the cloud infrastructure. This approach allows them to seamlessly combine traditional lateral movement techniques with cloud-specific methods.

Cloud providers offer measures like network segmentation and granular IAM management to limit lateral movement, along with centralized logging to detect this movement. However, cloud APIs do still provide additional mechanisms that attackers could abuse, and misconfigurations can open up further opportunities for malicious behavior.

This article will show how both agent and agentless solutions come together to protect against lateral movement, with each contributing unique strengths. This can help defenders understand why combining both solutions ensures comprehensive coverage in the cloud.

Cortex XDR and Prisma Cloud provide protection from the lateral movement techniques discussed here. If you think you might have been impacted or have an urgent matter, get in touch with the Unit 42 Incident Response team.

| Related Unit 42 Topics | Cloud Cybersecurity Research |

Introduction

Exploring lateral movement in the cloud versus on-premises reveals a marked difference. Each environment has its challenges for defenders. In cloud environments, overly permissive access policies and misconfigured resources can expand opportunities for threat actors to abuse cloud features.

These opportunities introduce a different set of lateral movement techniques. Combined with powerful API access within the cloud, these techniques can give attackers access to compute instances running within the environment.

In cloud services, APIs play a pivotal role in communication, as well as in facilitating lateral movement. When attackers are equipped with the right cloud permissions, which are typically write access, they can directly interact with cloud services by executing API calls. In such cases, the barrier between access within the cloud and access to compute instances may not be particularly strong, which enables attackers to create or modify cloud resources, effectively streamlining lateral movement.

The scalability of cloud environments – allowing on-demand resource provisioning – simplifies this process, as attackers can effortlessly create new compute instances to expand their operations and take control over existing ones.

Cloud Lateral Movement Techniques

Technique 1: Snapshot Creation

AWS: Elastic Block Store (EBS)

In one case, an attacker gained access to a cloud environment and attempted to pivot between Amazon Elastic Compute Cloud (EC2) instances. The attacker first attempted to access an EC2 instance using traditional lateral movement techniques, such as exploiting default open ports and abusing existing SSH keys.

When these methods proved unsuccessful, the attacker then shifted to use cloud-specific lateral movement techniques. Because the attacker was equipped with relatively powerful IAM credentials, they were able to take another approach to gain access to data within the instance.

This approach would not give access to the runtime environment present on the target instance (including data in memory and data available in the instance's cloud metadata service, such as IAM credentials). However, it would allow access to the data stored on the disk of the target instance.

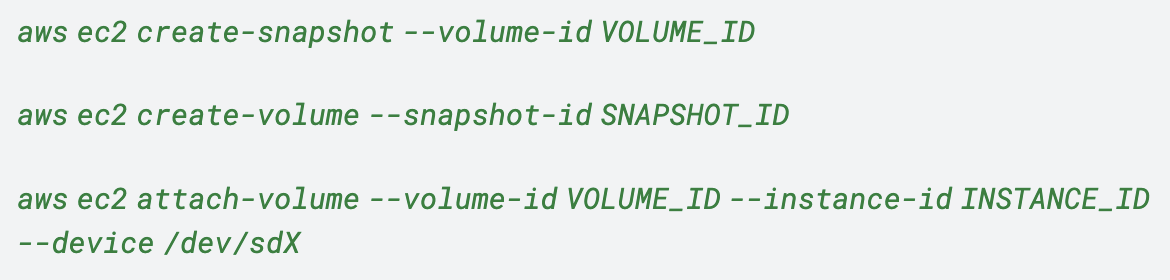

The attacker first created a new EC2 instance with its own set of SSH keys. Next, they used the CreateSnapshot API to create an EBS snapshot of their target EC2 instance, and then mounted that snapshot on the EC2 instance under their control, as shown in Figure 1.

In this case, the attacker could not access the target host interactively, but the data stored on the host's virtual block device was still accessible, which served as a partial substitute. The relatively powerful privileges of the compromised IAM credentials, combined with the cloud provider's APIs, made this possible.

Subsequently, with the EBS snapshot mounted on the attacker’s EC2 instance, the attacker successfully gained access to data stored on the target EC2 instance’s disk. Figure 2 illustrates this chain of events.

This technique is not limited to AWS; it is also relevant to other cloud service providers.
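From the defender's side, the snapshot chain described above leaves a trail in cloud audit logs. The following is a minimal sketch, assuming CloudTrail-style event dictionaries in which `CreateSnapshot` reports the new snapshot ID under `responseElements` and `CreateVolume` carries the source snapshot under `requestParameters`. It also assumes the attacker restores the snapshot to a volume before attaching it. The function name `find_snapshot_pivots` is hypothetical, and the field layout should be checked against real log samples before relying on it.

```python
def find_snapshot_pivots(events):
    """Return (principal, snapshot_id) pairs where the same principal created
    a snapshot and later created a volume from it."""
    created_by = {}  # snapshot ID -> ARN of the principal that created it
    pivots = []
    # ISO 8601 UTC timestamps in one format sort correctly as plain strings.
    for event in sorted(events, key=lambda e: e["eventTime"]):
        principal = (event.get("userIdentity") or {}).get("arn", "unknown")
        if event["eventName"] == "CreateSnapshot":
            # Assumed field: the new snapshot ID is in responseElements.
            snapshot_id = (event.get("responseElements") or {}).get("snapshotId")
            if snapshot_id:
                created_by[snapshot_id] = principal
        elif event["eventName"] == "CreateVolume":
            # Assumed field: the source snapshot ID is in requestParameters.
            snapshot_id = (event.get("requestParameters") or {}).get("snapshotId")
            if snapshot_id and created_by.get(snapshot_id) == principal:
                pivots.append((principal, snapshot_id))
    return pivots
```

Legitimate backup and restore workflows produce the same sequence, so a result from this sketch is a lead for review and not proof of compromise.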

Technique 2: SSH Keys

AWS: EC2 Instance Connect

In a different scenario, an attacker with compromised identity and access management (IAM) credentials used the AuthorizeSecurityGroupIngress API to add an inbound SSH rule to security groups. As a result, instances that were previously protected from internet access by the security group became reachable, including from attacker-controlled IP addresses.

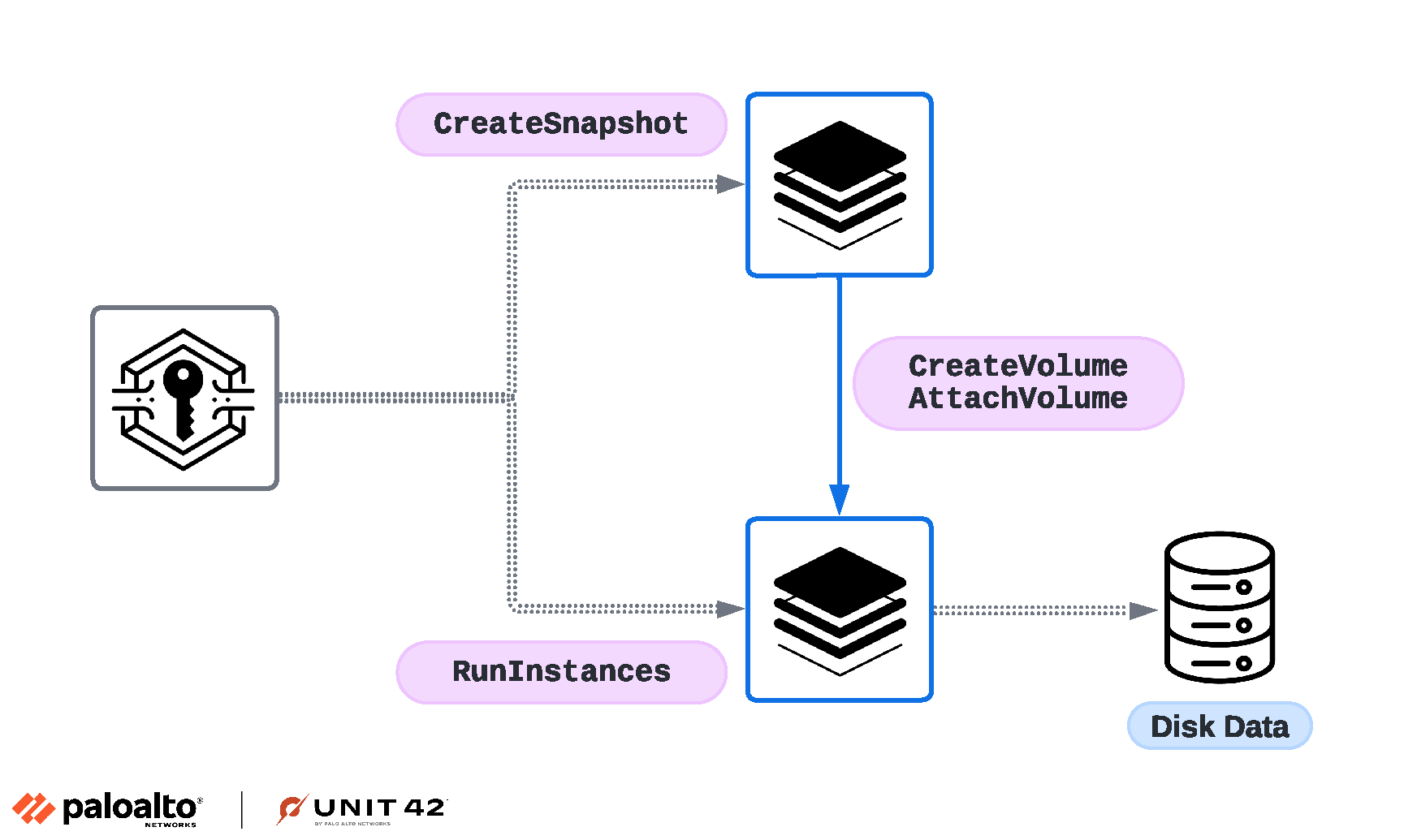

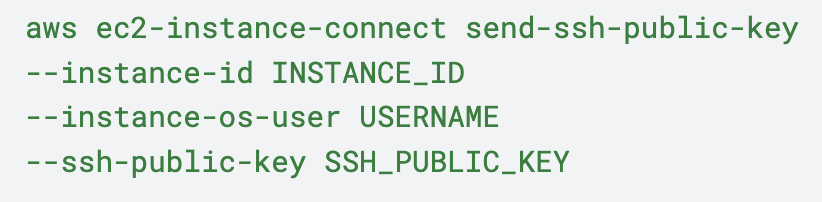

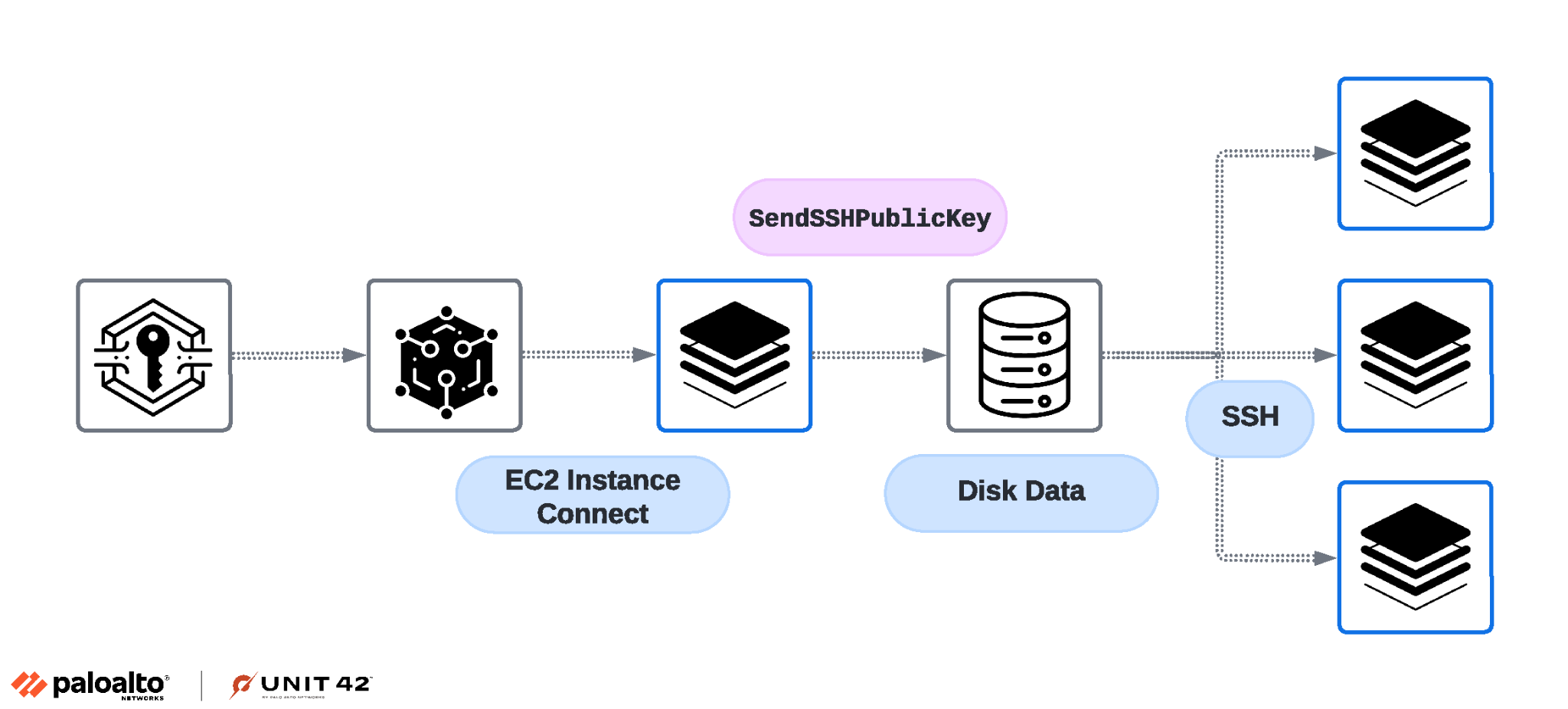

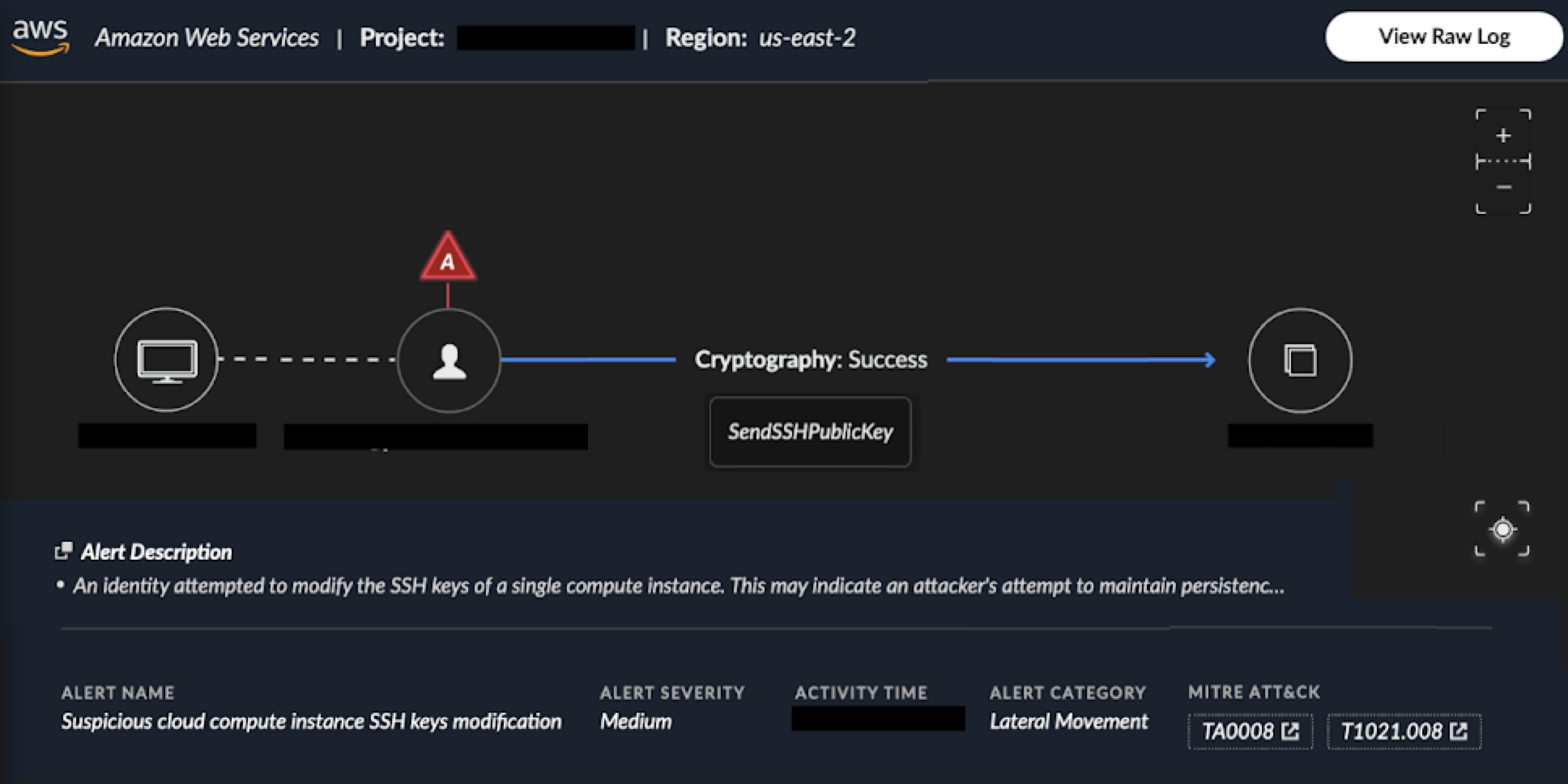

Modifying the security group rules allowed classic network lateral movement, showcasing the simplicity of configuring network resources in the cloud compared to on-premises environments. Following this, the powerful IAM permissions the attacker gained allowed them to use the EC2 Instance Connect service (which manages SSH keys on a machine). They were able to temporarily push a public SSH key by using the SendSSHPublicKey API as shown in Figure 3.
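Defenders can audit for the kind of security group change described above. The sketch below assumes input shaped like the `SecurityGroups` list returned by the EC2 DescribeSecurityGroups API (for example, via boto3's `describe_security_groups`). The function name `ssh_open_to_world` is hypothetical, and the check covers only the widest case, where TCP port 22 is reachable from any address. A rule scoped to a single attacker-controlled address would need a comparison against an allowlist instead.

```python
def ssh_open_to_world(security_groups):
    """Return IDs of security groups that allow TCP/22 from any address."""
    exposed = []
    for group in security_groups:
        for perm in group.get("IpPermissions", []):
            protocol = perm.get("IpProtocol")
            if protocol == "-1":
                covers_ssh = True  # "-1" means all protocols and all ports
            elif protocol == "tcp":
                covers_ssh = perm.get("FromPort", 0) <= 22 <= perm.get("ToPort", 65535)
            else:
                covers_ssh = False
            if not covers_ssh:
                continue
            cidrs = [r.get("CidrIp") for r in perm.get("IpRanges", [])]
            cidrs += [r.get("CidrIpv6") for r in perm.get("Ipv6Ranges", [])]
            if "0.0.0.0/0" in cidrs or "::/0" in cidrs:
                exposed.append(group["GroupId"])
                break  # one matching rule is enough to flag the group
    return exposed
```

Running a check like this on a schedule, and comparing results between runs, is one way to notice a newly added inbound SSH rule.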

This allowed the attacker to connect to an EC2 instance, granting them access to the instance’s data. This serves as an example of how cloud providers have their own mechanisms for accessing compute instances alongside the operating system’s native authentication and authorization technologies. However, there are typically connections between the two.

Although there are barriers between the cloud and the compute instances running within it, these barriers are permeable by design, enabling movement between these different authentication and authorization systems. This is a good example of how powerful IAM credentials allow access to compute instances (as well as containers and RDS databases, for example). They can do so even though these non-cloud-native technologies might have their own authentication methods that operate independently, outside the cloud.

Within the EC2 instance, the attacker discovered additional cleartext credentials saved to disk, notably a private SSH key and AWS access tokens. With these access credentials, the attacker pivoted to additional EC2 instances.

Using SSH and cloud tokens for lateral movement demonstrates the combination of both traditional and cloud lateral movement techniques employed by the attacker. Eventually, utilizing these credentials led to the attacker pivoting to other development environments. Figure 4 provides a flow chart for this chain of events.

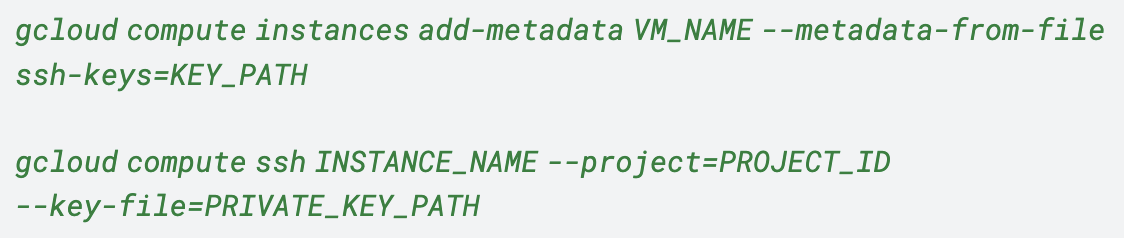

GCP: Metadata-Based SSH Keys

An equivalent lateral movement technique exists in GCP when instances are misconfigured. Compute Engine instances can be configured to store their SSH keys in the instance metadata, as long as the OS Login service is not used.

Stored in the instance metadata, these SSH keys facilitate access to individual instances. But instances can also have their SSH keys stored in the project metadata, which means these keys will grant access to all instances within the project.

This works as long as instances do not restrict project-wide SSH keys. Public SSH keys can be appended to instance metadata by using the Google Cloud CLI as illustrated below in Figure 5.

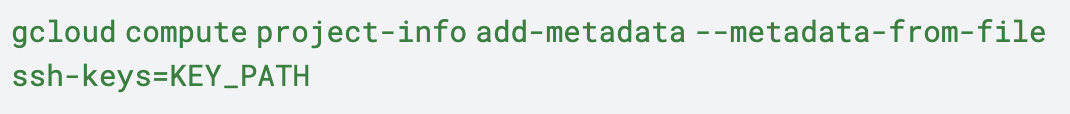

Similarly, public SSH keys can also be added to the project metadata with elevated privileges. This enables attackers with sufficiently powerful cloud credentials to access all instances in that specific project using the command shown below in Figure 6.

Virtual private cloud network security settings can protect against misconfigurations of SSH keys.
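The metadata behavior described above can be audited offline. The following sketch assumes metadata is available as a list of `{"key": ..., "value": ...}` items, as the Compute Engine API returns it, with `ssh-keys` holding one `USERNAME:KEY` entry per line. It treats `enable-oslogin` and `block-project-ssh-keys` as the controlling flags, with the instance-level OS Login value taking precedence over the project-level one. The function names are hypothetical.

```python
def parse_ssh_keys(metadata_items):
    """Return (username, key_type) pairs from an 'ssh-keys' metadata entry."""
    entries = []
    for item in metadata_items:
        if item.get("key") != "ssh-keys":
            continue
        for line in item.get("value", "").splitlines():
            line = line.strip()
            if not line or ":" not in line:
                continue
            username, key = line.split(":", 1)
            fields = key.split()
            entries.append((username, fields[0] if fields else ""))
    return entries


def _lookup(items, name):
    """Return the value stored under a metadata key, or None if absent."""
    for item in items:
        if item.get("key") == name:
            return item.get("value", "")
    return None


def accepts_project_keys(instance_items, project_items):
    """True if project-wide SSH keys would grant access to this instance."""
    oslogin = _lookup(instance_items, "enable-oslogin")
    if oslogin is None:
        oslogin = _lookup(project_items, "enable-oslogin")
    if (oslogin or "").lower() == "true":
        return False  # metadata-based keys are not used when OS Login is on
    blocked = _lookup(instance_items, "block-project-ssh-keys")
    return (blocked or "").lower() != "true"
```

Listing the instances for which `accepts_project_keys` returns `True` gives a rough picture of how far a single key added to project metadata would reach.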

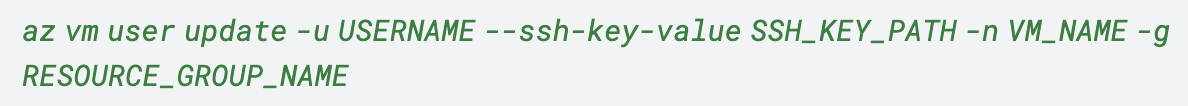

Azure: VMAccess Extension

Another noteworthy lateral movement technique is abusing the VMAccess extension in Azure. The VMAccess extension is used for resetting access to virtual machines (VMs), and one of its functionalities in Linux VMs includes updating the SSH public key for a user.

Attackers with sufficiently powerful cloud credentials can use this extension to gain access to VMs by resetting the SSH key for a specific user in a designated VM. This is done in the Azure CLI with the command shown in Figure 7.

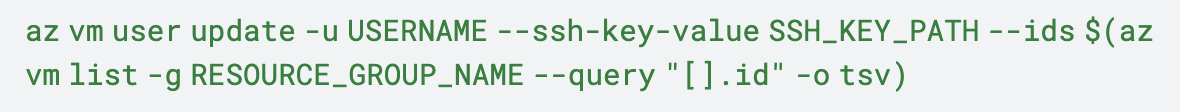

This technique can also be extended to compromise a specific user across multiple VMs in the same resource group, using the command shown in Figure 8.
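One way to look for the multi-VM pattern described above is to group extension writes by caller and resource group. The sketch below assumes activity log entries have already been normalized into dictionaries with hypothetical `caller`, `operation` and `resource_id` fields. It relies on the standard Azure resource ID layout and on `Microsoft.Compute/virtualMachines/extensions/write` as the operation recorded when an extension is installed or updated. It does not distinguish VMAccess from other extensions, so results need manual review.

```python
from collections import defaultdict

EXTENSION_WRITE = "Microsoft.Compute/virtualMachines/extensions/write"


def parse_vm(resource_id):
    """Extract (resource_group, vm_name) from an Azure resource ID, or None."""
    parts = resource_id.strip("/").split("/")
    lowered = [p.lower() for p in parts]
    try:
        group = parts[lowered.index("resourcegroups") + 1]
        vm = parts[lowered.index("virtualmachines") + 1]
    except (ValueError, IndexError):
        return None
    return group.lower(), vm.lower()


def extension_spread(records, min_vms=2):
    """Map (caller, resource_group) to the VMs that caller wrote extensions to,
    keeping only entries that touch at least min_vms distinct VMs."""
    touched = defaultdict(set)
    for record in records:
        if record["operation"].lower() != EXTENSION_WRITE.lower():
            continue
        parsed = parse_vm(record["resource_id"])
        if parsed:
            group, vm = parsed
            touched[(record["caller"], group)].add(vm)
    return {key: sorted(vms) for key, vms in touched.items() if len(vms) >= min_vms}
```

The `min_vms` threshold is an arbitrary starting point. Automation accounts that routinely deploy extensions would need to be excluded or baselined.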

Technique 3: Serial Console Access

AWS: Serial Console Access

Another technique we've observed involves serial console access. The serial console exists in all three major cloud providers, and it typically offers an interactive shell on an instance. It serves as a troubleshooting tool that does not depend on the instance's network connectivity.

In contrast to the EC2 Instance Connect technique, this approach comes with greater limitations, as it requires preconfiguration of the instance's operating system with a user password or additional functionalities like SysRq.

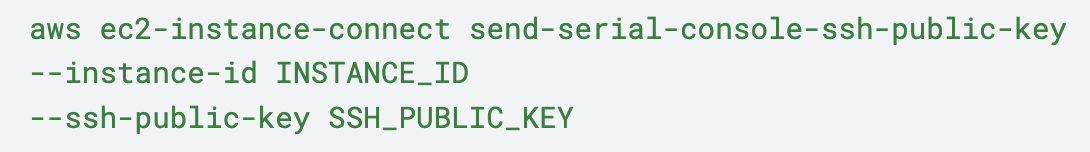

In this context, an attacker chose the serial console as an alternative to SSH, as it can bypass security group rules configured for the instance. In one case, an attacker once again used the EC2 Instance Connect service, using the SendSerialConsoleSSHPublicKey API to temporarily push a public SSH key. See the command shown in Figure 9.

This time, the pushed key allowed the attacker to establish a serial console connection to the EC2 instances, where they could access the file system and execute shell commands.
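Both key-push APIs mentioned in this article, SendSSHPublicKey and SendSerialConsoleSSHPublicKey, belong to the EC2 Instance Connect service and can be summarized together. The sketch below assumes CloudTrail-style events whose `eventSource` is `ec2-instance-connect.amazonaws.com` and whose `requestParameters` include an `instanceId`. The function name `key_pushes_by_principal` is hypothetical, and the field names should be confirmed against real log samples.

```python
from collections import defaultdict

KEY_PUSH_APIS = {"SendSSHPublicKey", "SendSerialConsoleSSHPublicKey"}


def key_pushes_by_principal(events):
    """Map each principal ARN to {api_name: sorted instance IDs it pushed keys to}."""
    pushes = defaultdict(lambda: defaultdict(set))
    for event in events:
        if event.get("eventSource") != "ec2-instance-connect.amazonaws.com":
            continue
        if event.get("eventName") not in KEY_PUSH_APIS:
            continue
        principal = (event.get("userIdentity") or {}).get("arn", "unknown")
        # Assumed field: the target instance ID is in requestParameters.
        instance_id = (event.get("requestParameters") or {}).get("instanceId")
        if instance_id:
            pushes[principal][event["eventName"]].add(instance_id)
    return {
        principal: {api: sorted(ids) for api, ids in apis.items()}
        for principal, apis in pushes.items()
    }
```

A principal that pushes keys to many instances, or that uses the serial console API when the organization does not normally use the serial console, is worth a closer look.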

GCP: SSH Key Authentication

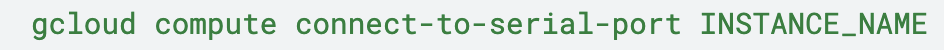

In GCP, the serial console relies on SSH key authentication, requiring a public SSH key added to the project or instance metadata. An attacker with sufficient cloud API privileges could potentially use the Google Cloud CLI to establish a serial console connection to a Compute Engine instance using the following command in Figure 10.

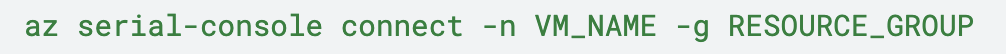

Azure: VMAccess Extension

This technique has some limitations in Azure. An attacker with sufficient cloud API privileges can use the VMAccess extension to either create a new local user with a password or reset the password of an existing local user. Subsequently, the attacker uses a command in the Azure CLI to initiate a serial console connection to the VM, as shown in Figure 11.

Technique 4: Management Services

AWS: Systems Manager

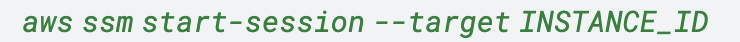

In another use case, an attacker with IAM privileges to the Systems Manager service targeted instances managed by the service. There, the attacker established a connection to multiple managed instances using the StartSession API and initiated an interactive shell session on each instance using the command shown in Figure 12.

Of note, this method does not require an SSH inbound rule in the associated security group of the EC2 instance, effectively bypassing security group rules.

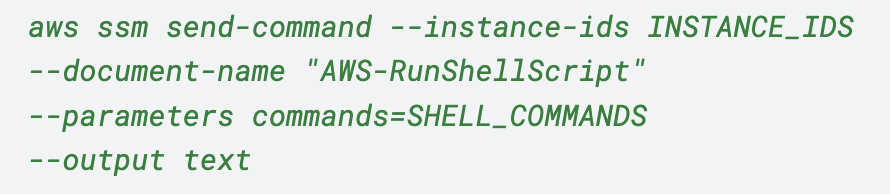

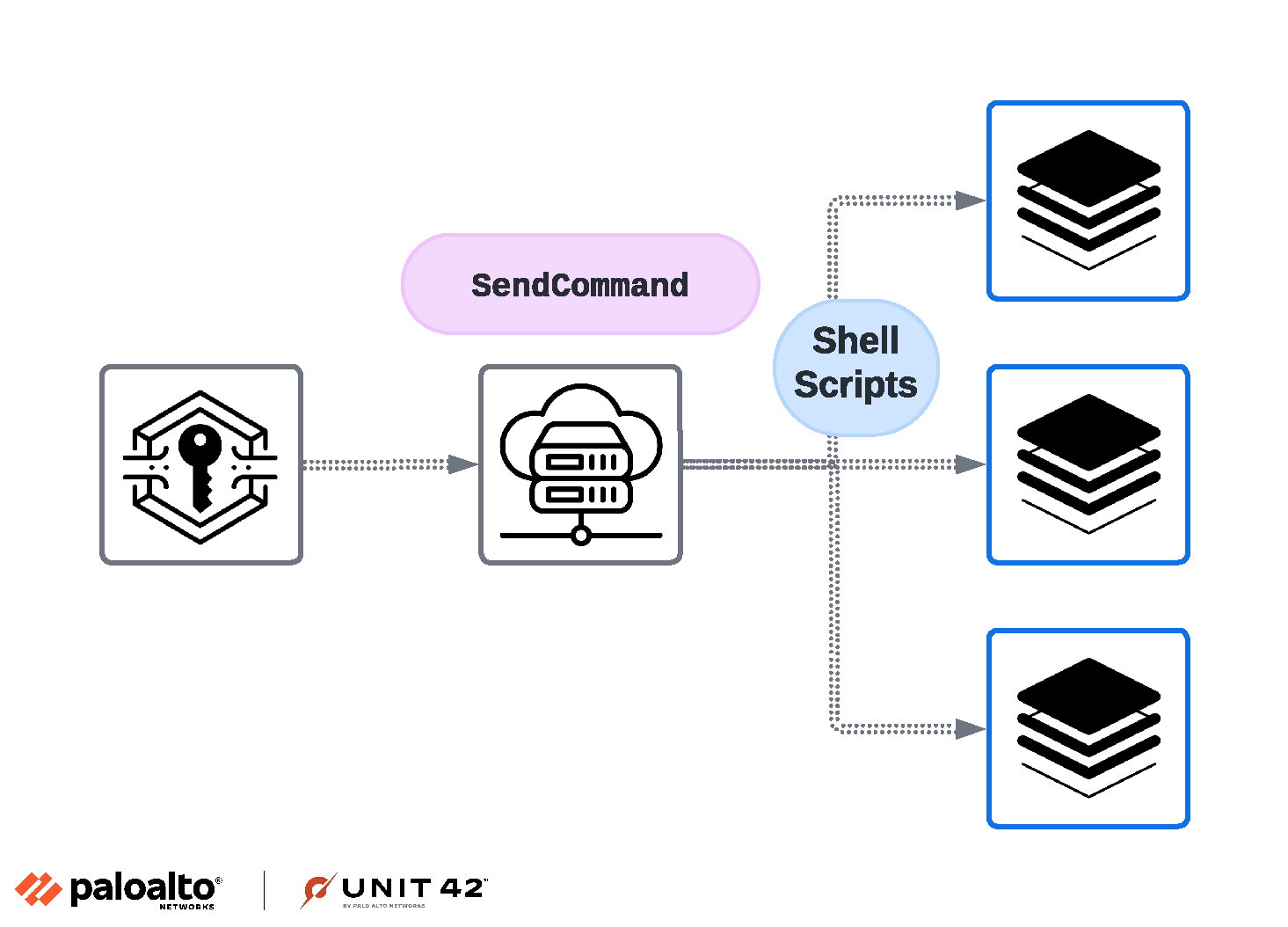

The attacker also used the SendCommand API to execute scripts across a large number of managed instances simultaneously, enabling large-scale information gathering specifically targeting credentials files. The command to do this is shown in Figure 13.

Below, Figure 14 shows a flowchart illustrating the chain of events for this attack.
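The large-scale use of SendCommand described above can be surfaced by measuring how many instances each call targets. This is a minimal sketch, assuming CloudTrail-style events from `ssm.amazonaws.com` in which `requestParameters` carries an `instanceIds` list and a `documentName`. The function name `ssm_fanout` and the threshold are hypothetical. Calls that select instances by tag through `targets` instead of explicit IDs are not counted here.

```python
def ssm_fanout(events, max_instances=5):
    """Return (principal, document_name, instance_count) for each SendCommand
    call that targets more than max_instances explicit instance IDs."""
    findings = []
    for event in events:
        if event.get("eventSource") != "ssm.amazonaws.com":
            continue
        if event.get("eventName") != "SendCommand":
            continue
        params = event.get("requestParameters") or {}
        # Assumed field: explicit targets are listed under instanceIds.
        instance_ids = params.get("instanceIds") or []
        if len(instance_ids) > max_instances:
            principal = (event.get("userIdentity") or {}).get("arn", "unknown")
            findings.append((principal, params.get("documentName"), len(instance_ids)))
    return findings
```

Patch management and configuration tooling also fan out commands, so the threshold and any principal exclusions should be tuned to the environment's normal activity.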

Agent and Agentless: Better Together

Given the nature of the cloud, we can categorize attackers' techniques into two layers: the host and the cloud. The host layer encompasses all actions executed within cloud instances, while the cloud layer comprises all the API calls made within the cloud environment. In each of the techniques we observed, the attackers moved seamlessly between the cloud and the instances, utilizing both cloud APIs and operations at the host level.

Regardless of the authentication and authorization technologies typically used for managed compute instances, defenders should assume that these are not strong barriers. Attackers with powerful cloud credentials could still gain access to compute instances within the cloud environment.

Detecting the activities in this article requires correlating data from both agent and agentless solutions that provide a comprehensive view into cloud environments.

Attackers who are able to access hosts often have sufficient privileges to disable local security controls and security agents running on the host. Thus, it is important to be alert to when relevant log streams from hosts stop as a possible indicator of compromise.
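Watching for log streams that stop can be reduced to a simple gap check. The sketch below assumes the defender already tracks the timestamp of the most recent log record per host. The function name `silent_hosts`, the host names and the 15-minute threshold are all hypothetical.

```python
from datetime import datetime, timedelta


def silent_hosts(last_seen, now, max_gap=timedelta(minutes=15)):
    """Return hosts whose most recent log record is older than max_gap."""
    return sorted(host for host, seen in last_seen.items() if now - seen > max_gap)


now = datetime(2024, 1, 1, 12, 0)
last_seen = {
    "host-a": datetime(2024, 1, 1, 11, 55),  # reported 5 minutes ago
    "host-b": datetime(2024, 1, 1, 11, 0),   # silent for an hour
}
print(silent_hosts(last_seen, now))  # ['host-b']
```

Hosts that were deliberately stopped or terminated will also go silent, so a check like this works best when combined with the instance state reported by the cloud provider.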

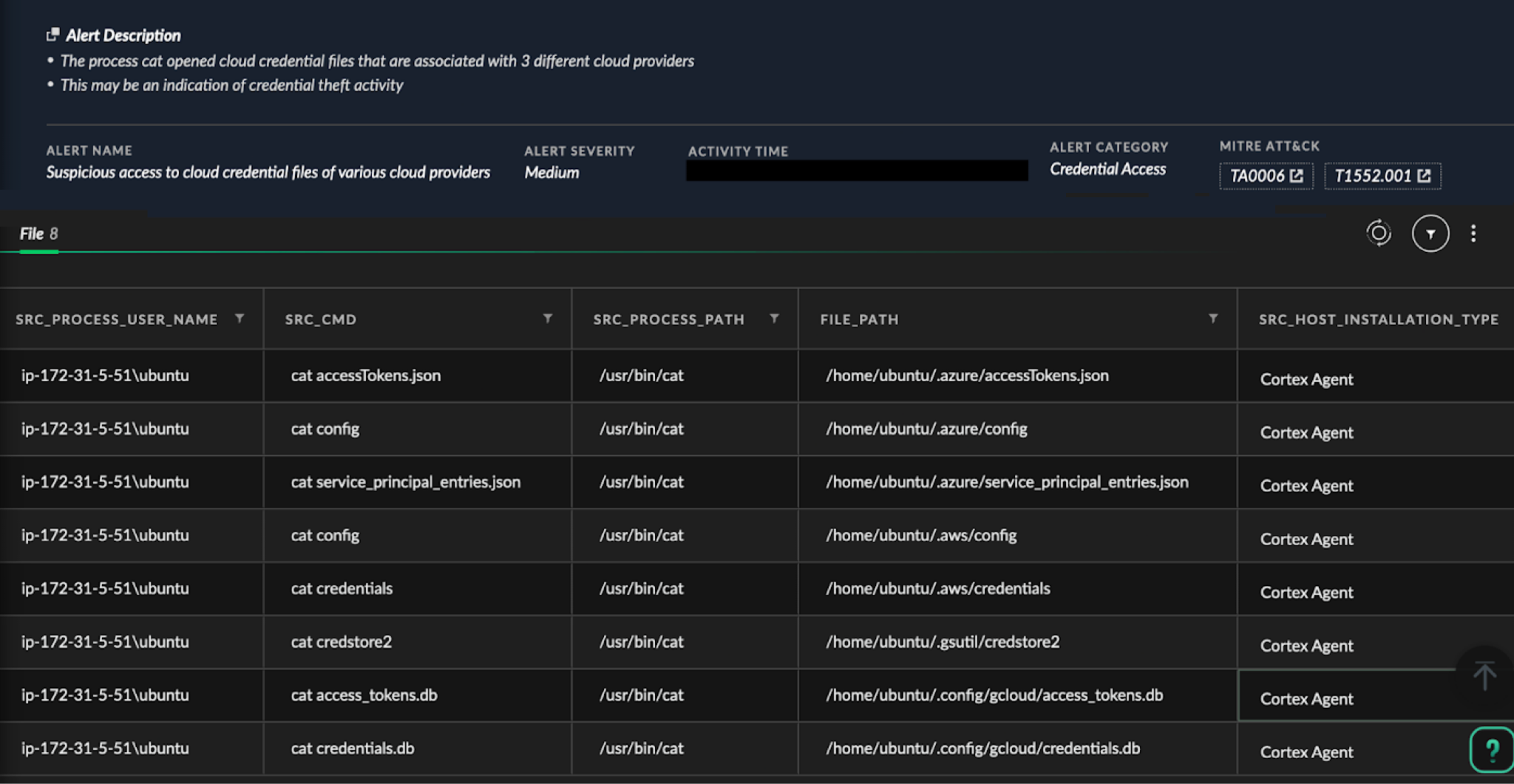

Let's examine lateral movement technique 2, where an attacker abused the EC2 Instance Connect service to access EC2 instances. The agentless solution provides insights into the attacker's access methods by offering visibility into all executed cloud-level API calls, including actions such as security group modification and SSH key injection. An alert for this in an AWS panel is shown below in Figure 15.

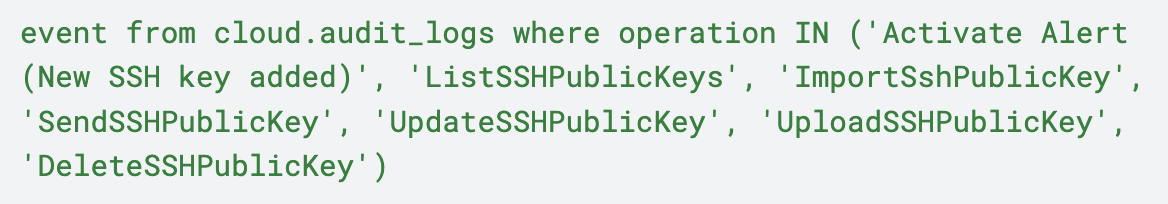

The Prisma Cloud Resource Query Language (RQL) query shown below in Figure 16 could also be used to identify suspicious SSH operations taken by a malicious actor.

At the same time, a Cortex XDR agent installed on an EC2 instance offers host-level visibility, revealing the attacker's efforts to search for credentials and sensitive files. This visibility proved crucial in understanding how the attacker performed lateral movement and pivoted to other environments.
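The idea of combining cloud-level and host-level visibility can be illustrated with a small time-window join. This sketch is not how Cortex XDR or Prisma Cloud implement correlation. It assumes both data sources have been normalized into dictionaries with hypothetical `instance_id`, `time`, `principal` and `action` fields, and it pairs each cloud-level event with host activity on the same instance shortly afterward.

```python
from datetime import datetime, timedelta


def correlate(cloud_events, host_events, window=timedelta(minutes=30)):
    """Pair each cloud-level event with host activity that follows it on the
    same instance within the given time window."""
    matches = []
    for cloud in cloud_events:
        for host in host_events:
            if host["instance_id"] != cloud["instance_id"]:
                continue
            delay = host["time"] - cloud["time"]
            if timedelta(0) <= delay <= window:
                matches.append((cloud["principal"], cloud["instance_id"], host["action"]))
    return matches


cloud_events = [
    {"instance_id": "i-0a", "time": datetime(2024, 1, 1, 10, 0),
     "principal": "arn:aws:iam::111122223333:user/example"},
]
host_events = [
    {"instance_id": "i-0a", "time": datetime(2024, 1, 1, 10, 5),
     "action": "read of a credentials file"},
    {"instance_id": "i-0b", "time": datetime(2024, 1, 1, 10, 6),
     "action": "read of a credentials file"},
]
matches = correlate(cloud_events, host_events)
```

In this example, only the activity on `i-0a` is paired with the cloud event, because the second host event belongs to a different instance. Neither source alone shows both who gained access and what they did afterward.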

It is more effective to secure cloud environments with both agent and agentless solutions. Agents are great for having full visibility and control over specific instances, with the caveat that agents can often be disabled by attackers.

On the other hand, agentless solutions provide cloud-level visibility. Moreover, properly configured cloud logging environments cannot easily be modified or disabled by attackers, providing a critical backstop for defenders. Combining both solutions provides the best of both worlds in terms of securing cloud environments.

Conclusion

Attackers with sufficient privileges at the cloud API level can take advantage of the characteristics of cloud environments for lateral movement using cloud APIs. Cloud APIs often enable attackers to pivot through compute instances with relative ease compared to traditional lateral movement in typical on-premises environments. However, on-premises environments, especially virtualized environments with the equivalent power available to an attacker with access to the virtualization layer, have plenty of risks of this type as well.

For some of the scenarios described, there may be detections and remediations available from CSPs or other sources. Defenders should be aware of the options available to improve their cloud security posture.

Since misconfigurations and similar issues can often weaken organizations’ cloud security, defenders should pay particular attention to following the best practices recommended by their CSP, and to ensuring that their configuration matches their security needs.

Employing both agent and agentless solutions is an effective way to detect the hybrid tactics of attackers who combine traditional and cloud-based techniques for lateral movement. The cloud lateral movement techniques illustrated in this article highlight the importance of having a comprehensive security approach to effectively address the challenges inherent in cloud environments.

Palo Alto Networks customers receive better protection from these attacks through the following products:

Cortex XDR

Cortex XDR provides SOC teams with a full incident story across the entire digital domain by integrating activity from cloud hosts, cloud traffic and audit logs together with endpoint and network data. Cortex leverages all this data to detect unusual cloud activity that correlates with known TTPs such as cloud computing credential theft, cryptojacking and data exfiltration.

Prisma Cloud

Prisma Cloud provides DevOps and SOC teams the ability to monitor for vulnerabilities, configuration and event operations across organization-wide cloud and hybrid cloud resources and infrastructure. Prisma Cloud Defender agents protect and monitor for vulnerabilities, misconfiguration and suspicious runtime events for:

- Cloud web apps

- VM runtime events

- Serverless functions

- Storage container resources

This allows organizations to maintain situational awareness and improve DevOps practices to maintain a secure cloud environment.

If you think you might have been impacted or have an urgent matter, get in touch with the Unit 42 Incident Response team or call:

- North America Toll-Free: 866.486.4842 (866.4.UNIT42)

- EMEA: +31.20.299.3130

- APAC: +65.6983.8730

- Japan: +81.50.1790.0200

Palo Alto Networks has shared these findings with our fellow Cyber Threat Alliance (CTA) members. CTA members use this intelligence to rapidly deploy protections to their customers and to systematically disrupt malicious cyber actors. Learn more about the Cyber Threat Alliance.

Google Cloud has provided guidance on how to properly configure cloud systems to protect against the lateral movement opportunities discussed in this article.

