Executive Summary

This post presents our research on a methodology we call BOLABuster, which uses large language models (LLMs) to detect broken object level authorization (BOLA) vulnerabilities. By automating BOLA detection at scale, BOLABuster has produced promising results in identifying these vulnerabilities in open-source projects.

BOLA is a widespread and potentially critical vulnerability in modern APIs and web applications. While manually exploiting BOLA vulnerabilities is usually straightforward, automatically identifying new BOLAs is challenging for the following reasons:

- The complexities of application logic

- The diverse range of input parameters

- The stateful nature of modern web applications

For these reasons, traditional methodologies like fuzzing and static analysis are ineffective in detecting BOLAs, making manual detection the standard approach.

To address these challenges, we utilize the reasoning and generative capabilities of LLMs to automate tasks traditionally done manually. These tasks include the following:

- Understanding application logic

- Identifying endpoint dependency relationships

- Generating test cases and interpreting test results

By combining LLMs with heuristics, our method enables fully automated BOLA detection at scale.

Although our research is in its early stages, we have already uncovered a number of BOLA vulnerabilities in both internal and open-source projects. These include the following vulnerabilities:

- Grafana (CVE-2024-1313)

- Harbor (CVE-2024-22278)

- Easy!Appointments (CVE-2023-3285 to CVE-2023-3290 and CVE-2023-38047 to CVE-2023-38055)

As we continue to refine our research, we are also proactively hunting for BOLAs in the wild.

Cortex Xpanse and Cortex XSIAM customers with the ASM module are able to detect all exposed Grafana, Harbor and Easy!Appointments instances as well as known insecure instances via targeted attack surface rules.

If you think you might have been compromised or have an urgent matter, contact the Unit 42 Incident Response team.

Related Unit 42 Topics: GenAI, LLMs

The Challenges of Automating BOLA Detection

As explained in a previous article on BOLA vulnerabilities, BOLA occurs when an API application's backend fails to validate whether a user has the right permissions to access, modify or delete an object.

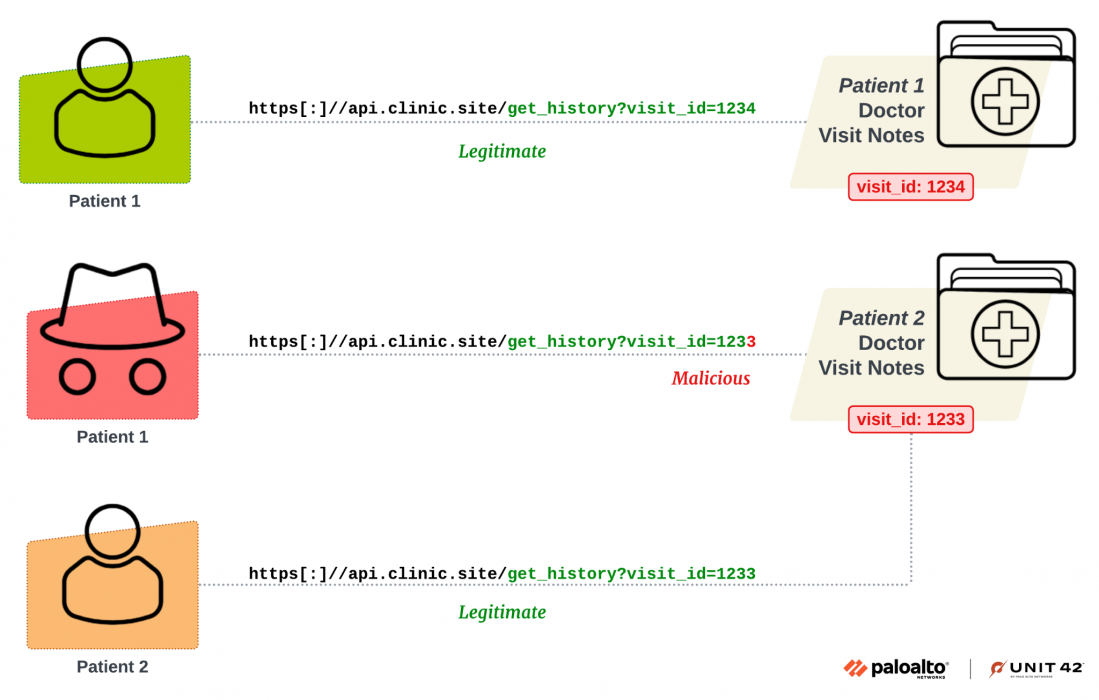

Figure 1 illustrates a simple BOLA example. In this medical application, patients can call the API api.clinic[.]site/get_history?visit_id=XXXX to access the notes from their doctor visits.

Each patient should only have access to their own medical records. However, if the server fails to properly validate this logic, a malicious patient may manipulate the visit_id parameter in the request to access another patient's data. Figure 1 shows this manipulation in the malicious API call.
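The difference between a vulnerable and a safe handler can be sketched in a few lines. The following is a minimal illustration, not the clinic application's actual code: the `VISITS` store, the `Visit` type and both handler functions are hypothetical names introduced here, and the authenticated user is assumed to be resolved from the session before the handler runs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Visit:
    visit_id: int
    patient: str
    note: str


# Hypothetical in-memory store standing in for the clinic's database.
VISITS = {
    1001: Visit(1001, "alice", "Seasonal allergy follow-up"),
    1002: Visit(1002, "bob", "Annual physical"),
}


def get_history_vulnerable(current_user: str, visit_id: int) -> str:
    """Return any visit note that exists; the caller's identity is never checked."""
    return VISITS[visit_id].note


def get_history_fixed(current_user: str, visit_id: int) -> str:
    """Return the note only if the visit belongs to the authenticated caller."""
    visit = VISITS[visit_id]
    if visit.patient != current_user:
        raise PermissionError("visit does not belong to the caller")
    return visit.note
```

In the vulnerable version, Bob can read Alice's note simply by passing `visit_id=1001`. The fixed version compares the object's owner with the caller before returning anything.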

Although the concept of BOLA is simple, automating its detection presents significant challenges. Unlike other common vulnerabilities such as SQL injection, cross-site scripting (XSS) and buffer overflow, security testing tools like static application security testing (SAST) and dynamic application security testing (DAST) can’t effectively identify BOLAs. These tools rely on known patterns and behaviors of the vulnerabilities, which do not apply to BOLAs. No automated tool currently exists for detecting BOLAs.

Additionally, no development framework exists that can assist developers in preventing BOLAs. As a result, security teams that need to audit for BOLAs must manually review the application and create custom test cases. Several technical challenges contribute to the difficulty of automating BOLA detection:

- Complex authorization mechanisms

Modern API applications often feature complex authorization mechanisms involving multiple roles, resource types and actions. This complexity makes it difficult for auditors to determine which actions a user should be allowed to perform on a specific resource.

- Stateful property

Most modern web applications are stateful, meaning each API call can change the application's state and affect the outcomes of other API calls. In other words, the response of calling one API endpoint depends on the execution results of other API endpoints. This complex logic is typically built into the web interfaces that guide end users to properly interact with the applications. However, automatically reverse-engineering the logic from the API specification and tracking the application states is not easy.

- Lack of vulnerability indicators

BOLA is a logical error without known patterns that compilers or SAST tools can recognize. At runtime, BOLA doesn't trigger any errors or exhibit specific behavior that reveals the issues. The input and output of a successful exploit typically result in successful requests with status code 200 and they do not contain any suspicious payloads, making it difficult to spot the vulnerabilities.

- Context-sensitive inputs

Testing for BOLA involves manipulating input parameters of API endpoints to identify vulnerabilities. This process requires pinpointing parameters that reference sensitive data and supplying the parameters with valid values to run the tests. We rely exclusively on the API specification to understand each endpoint's functionality and parameters, making it challenging to determine if an endpoint may reveal or manipulate sensitive data.

After identifying target endpoints and their parameters, the next step is to send requests to these endpoints and observe their behavior. Determining the specific parameter values for testing is difficult because only values mapping to existing objects in the system can trigger BOLAs. Automatically generating such payloads with traditional fuzzing techniques is both challenging and ineffective.

BOLABuster: AI-Assisted BOLA Detection

Given the recent advancements in generative AI (Gen AI) and the challenges of automating BOLA detection, we decided to tackle the problem with AI by developing BOLABuster. The BOLABuster methodology leverages the reasoning capabilities of LLMs to understand an API application and automate BOLA detection tasks that were previously manual and time-consuming.

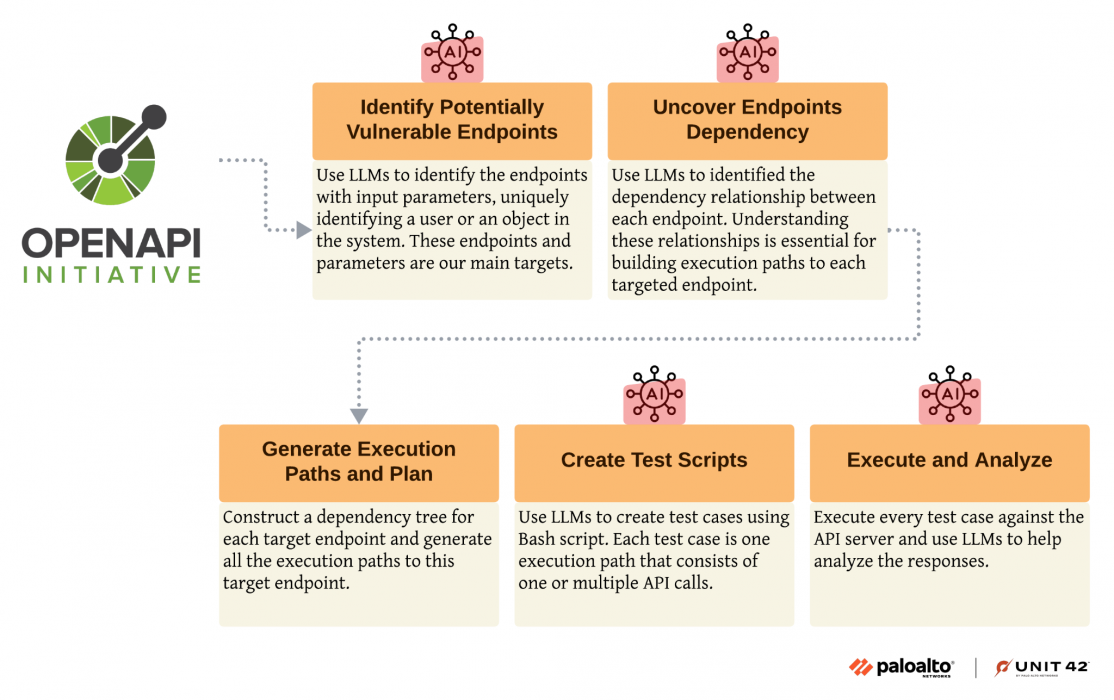

BOLABuster’s algorithm, as illustrated in Figure 2, requires only the API specification for the target API application as input. BOLABuster generates all test cases from the API specification. BOLABuster currently supports OpenAPI Specification 3, the most widely adopted API specification format.

BOLABuster's methodology involves five main stages:

1. Identify Potentially Vulnerable Endpoints (PVEs)

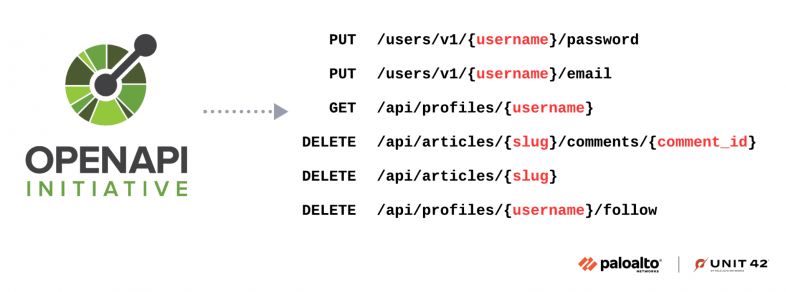

The first stage of our methodology identifies API endpoints that may be susceptible to BOLA. We focus on authenticated endpoints with input parameters that uniquely identify data objects in the system, such as username, email, teamId, invoiceId and visitId. Endpoints with these parameters might be vulnerable to BOLA if the backend fails to validate authorization logic.

AI assists in analyzing each endpoint's functionalities and parameters to determine which endpoints reference or return sensitive data. Figure 3 illustrates a set of potentially vulnerable endpoints.
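A simple heuristic pre-filter for this stage might look like the sketch below. It is an assumption about how candidates could be narrowed down before any LLM analysis, not BOLABuster's implementation: the function name `find_candidate_pves` and the identifier pattern are hypothetical, and the spec is assumed to be an OpenAPI 3 document already parsed into a Python dictionary.

```python
import re

# Assumed heuristic: parameter names that end like an object identifier.
# It will also match unrelated names such as "paid", so it only narrows the list.
ID_PATTERN = re.compile(r"(id|key|uuid|name|email)$", re.IGNORECASE)
HTTP_METHODS = {"get", "put", "post", "delete", "patch"}


def find_candidate_pves(spec: dict) -> list[tuple[str, str, list[str]]]:
    """Return (method, path, identifier_params) for authenticated operations."""
    global_security = spec.get("security", [])
    candidates = []
    for path, item in spec.get("paths", {}).items():
        shared_params = item.get("parameters", [])
        for method, op in item.items():
            if method not in HTTP_METHODS:
                continue  # skip non-operation keys such as "parameters"
            # An operation-level "security" overrides the global one; [] means no auth.
            if not op.get("security", global_security):
                continue
            params = shared_params + op.get("parameters", [])
            names = [p.get("name", "") for p in params]
            id_params = [n for n in names if ID_PATTERN.search(n)]
            if id_params:
                candidates.append((method.upper(), path, id_params))
    return candidates
```

A name-based filter like this cannot tell whether the referenced object is actually sensitive, which is the judgment the article delegates to the LLM.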

2. Uncover Endpoint Dependency

This stage analyzes the application logic to uncover dependency relationships between API endpoints. Due to the stateful nature of modern web applications, understanding the prerequisites of an API endpoint before testing is crucial.

For example, to test the checkout APIs of a shopping cart application, items must first be added to the cart. This action requires knowing the itemId and customerId.

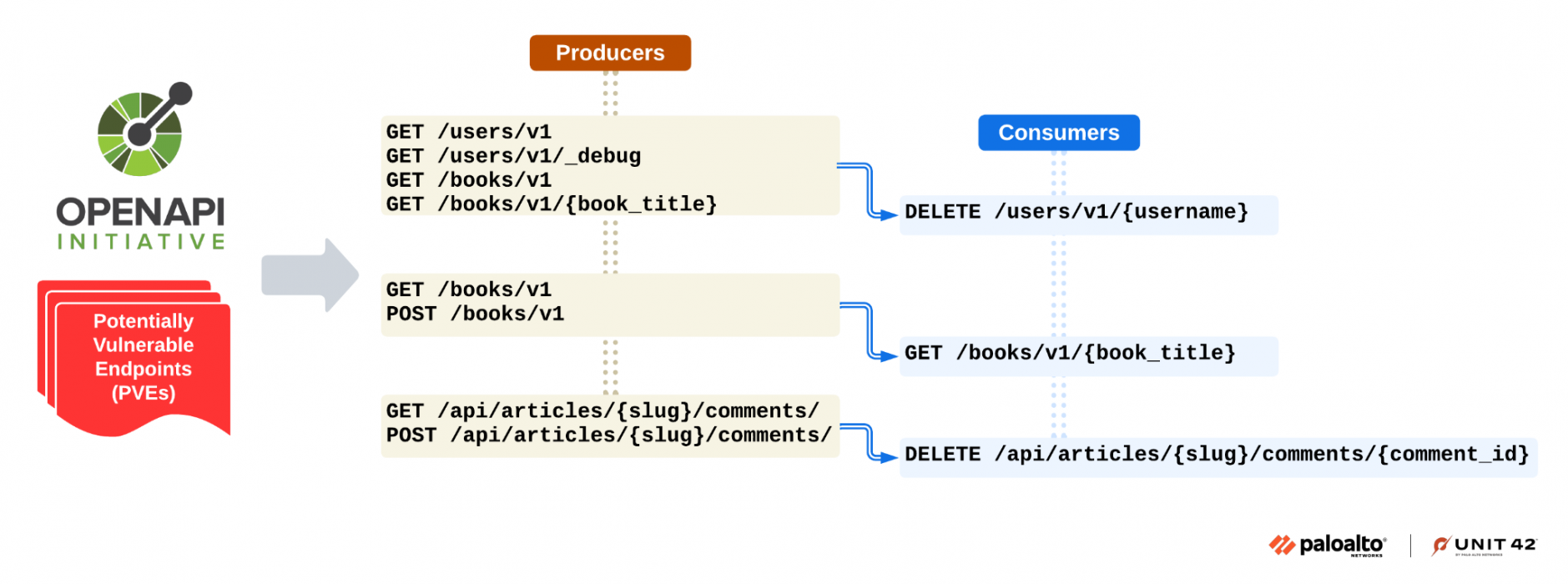

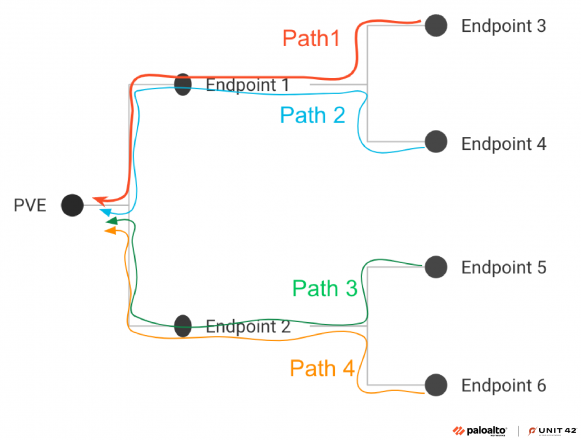

We categorize endpoints that output required parameters for other endpoints as Producers and those that ingest these parameters as Consumers, as shown in Figure 4. Each endpoint can function as both a Producer and a Consumer.

AI assists in analyzing each endpoint's functionalities and parameters to determine if one endpoint can output values required by another endpoint's inputs.
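The Producer and Consumer relationship can be approximated by matching output field names to input parameter names. The sketch below is a simplified stand-in for the LLM-assisted analysis, assuming each endpoint has already been summarized as lists of `inputs` and `outputs`; that summary format and the function name are hypothetical.

```python
from collections import defaultdict


def link_producers_to_consumers(endpoints: dict[str, dict]) -> dict[str, dict[str, list[str]]]:
    """Map each Consumer endpoint to {input_name: [Producer endpoints]}."""
    producers_by_field = defaultdict(list)
    for name, ep in endpoints.items():
        for field in ep.get("outputs", []):
            producers_by_field[field.lower()].append(name)

    dependencies = {}
    for name, ep in endpoints.items():
        needs = {}
        for param in ep.get("inputs", []):
            # Exact, case-insensitive name match; an endpoint never feeds itself.
            sources = [p for p in producers_by_field.get(param.lower(), []) if p != name]
            if sources:
                needs[param] = sources
        if needs:
            dependencies[name] = needs
    return dependencies
```

Exact name matching misses cases where one endpoint returns `id` and another expects `itemId`. Resolving that kind of semantic match is where reasoning over the endpoint descriptions becomes useful.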

3. Generate Execution Path and Test Plan

Using outputs from the previous two stages, this stage constructs a dependency tree for each PVE. Each node represents an API endpoint, and each edge from a parent node to a child node represents a dependency relationship where the parent is the Consumer and the child is the Producer.

The root of each dependency tree is a PVE, and the path from each leaf node to the root represents an execution path that can reach the PVE. We then create a test plan for each PVE, which consists of all the PVE's execution paths and their API calls. Figure 5 illustrates an example of a dependency tree with four execution paths.
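Enumerating the leaf-to-root paths of such a tree is a short recursive walk. The sketch below assumes the dependencies are available as a mapping from each Consumer to its Producers; `execution_paths` and the endpoint names in the usage example are hypothetical, and the cycle guard is an addition that the article does not describe.

```python
def execution_paths(pve: str, producers_of: dict[str, list[str]]) -> list[list[str]]:
    """List call sequences that start at a leaf Producer and end at the PVE."""
    paths = []

    def walk(node: str, trail: list[str]) -> None:
        trail = [node] + trail  # prepend, so the PVE stays last
        # Skip Producers already on this trail to avoid looping on cycles.
        children = [p for p in producers_of.get(node, []) if p not in trail]
        if not children:
            paths.append(trail)  # reached a leaf: one complete execution path
            return
        for child in children:
            walk(child, trail)

    walk(pve, [])
    return paths
```

For example, with `{"DELETE /comments/{id}": ["POST /comments"], "POST /comments": ["POST /articles"]}`, the only path for the delete endpoint calls `POST /articles`, then `POST /comments`, then the PVE itself.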

4. Create Test Scripts

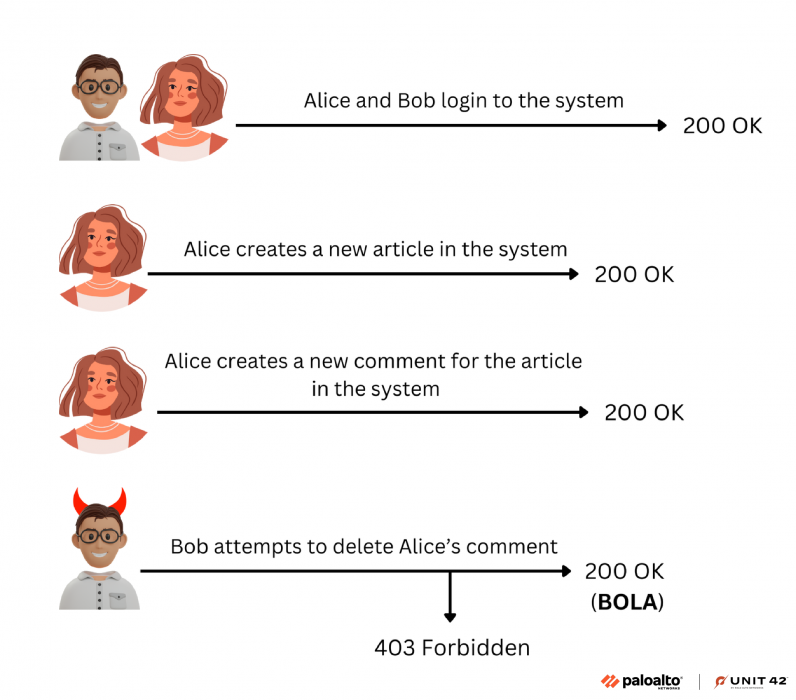

This stage converts each execution path into an executable bash script using LLMs. Each script makes a sequence of API calls to a target server, beginning with logging in to retrieve authentication tokens and ending with a call to the PVE.

Each test script involves at least two authenticated users, with one user attempting to access another user's data. If one user can successfully access or manipulate another user's data, it is an indicator of BOLA.

Figure 6 illustrates a high-level example of a BOLA test. The test involves two users, Alice and Bob, who log into a system and receive unique authentication tokens.

Alice creates an article and a comment on this article within the system. The identifiers for the article and the comment are then passed to Bob. Bob then attempts an unauthorized action: deleting Alice's comment. If the action is successful, it indicates a potential BOLA.
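The logic of the two-user test can be illustrated against a toy in-memory server. This sketch is written in Python for readability rather than as one of the LLM-generated bash scripts, and everything in it is hypothetical: `ToyBlogApi` is not a real API, and its `check_owner` flag only exists to simulate an application with and without the authorization check.

```python
import itertools


class ToyBlogApi:
    """Hypothetical in-memory stand-in for a target server (not a real API)."""

    def __init__(self, check_owner: bool):
        self.check_owner = check_owner  # False simulates the missing authorization check
        self.users = {}    # token -> username
        self.objects = {}  # object id -> {"owner": username, "parent": id or None}
        self._ids = itertools.count(1)

    def login(self, user: str) -> str:
        token = f"token-{user}"
        self.users[token] = user
        return token

    def create(self, token: str, parent_id=None) -> int:
        """Create an article (no parent) or a comment (parent is the article id)."""
        object_id = next(self._ids)
        self.objects[object_id] = {"owner": self.users[token], "parent": parent_id}
        return object_id

    def delete(self, token: str, object_id: int) -> int:
        """Delete an object and return an HTTP-like status code."""
        if token not in self.users:
            return 401
        if object_id not in self.objects:
            return 404
        if self.check_owner and self.objects[object_id]["owner"] != self.users[token]:
            return 403
        del self.objects[object_id]
        return 200


def bob_can_delete_alices_comment(api: ToyBlogApi) -> bool:
    """Run the two-user sequence; True indicates a potential BOLA."""
    alice = api.login("alice")
    bob = api.login("bob")
    article_id = api.create(alice)                        # Alice creates an article
    comment_id = api.create(alice, parent_id=article_id)  # and a comment on it
    status = api.delete(bob, comment_id)                  # Bob calls the PVE with Alice's id
    return status == 200
```

Running the function against `ToyBlogApi(check_owner=False)` reports a potential BOLA, while `ToyBlogApi(check_owner=True)` rejects Bob's request with a 403.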

5. Execute Plans and Analyze

In this stage, BOLABuster executes the test scripts against the target API server, and then it analyzes the responses to determine if the PVEs are vulnerable to BOLA. We automate user registration, user login and token refresh processes to ensure uninterrupted execution of every test plan.

BOLABuster runs the test cases for the same PVE in a specific order to minimize dependencies between them. For instance, we avoid having a test case delete an object that another test case will need.

Essentially, we ensure that all the API calls within an execution path are successful except for the call to the PVE. The outcome of this call should indicate whether the PVE is vulnerable to BOLA.
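That rule can be written down as a small status-code classifier. The sketch below is an assumed first-pass heuristic, not the AI-based log analysis the article describes: the function name, the verdict labels and the choice to treat 401, 403 and 404 as a refusal are all assumptions.

```python
def classify_run(statuses: list[int]) -> str:
    """Classify one execution path from its HTTP status codes, PVE call last."""
    if not statuses:
        return "inconclusive"
    *setup, pve = statuses
    if any(not 200 <= s < 300 for s in setup):
        # A prerequisite call failed, so the PVE result says nothing about BOLA.
        return "inconclusive"
    if 200 <= pve < 300:
        return "potential BOLA"  # the cross-user call went through
    if pve in (401, 403, 404):
        # Assumption: the server refused the request or hid the object.
        return "not vulnerable"
    return "inconclusive"
```

Status codes alone are not sufficient, since an application may return 200 with an error message in the body. That is one reason the responses still need closer analysis and human verification.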

The ordering algorithm ensures that applications are populated with the necessary data before it makes any access attempts. BOLABuster schedules the test scripts that include actions such as updating or deleting users or resources at the end of the execution sequence to prevent attempts to fetch deleted or modified resources.
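One way to express this scheduling is a stable sort keyed on how destructive the final call is. The sketch below assumes each test script is described by a dictionary with a `pve_method` field, which is a hypothetical format. Ranking deletes after updates is also an assumption, since the article only states that both run at the end.

```python
def order_test_scripts(scripts: list[dict]) -> list[dict]:
    """Stable sort: scripts whose PVE call updates or deletes data run last."""

    def rank(script: dict) -> int:
        method = script["pve_method"].upper()
        if method == "DELETE":
            return 2  # removes objects, so it runs after everything else
        if method in ("PUT", "PATCH"):
            return 1  # modifies objects that read-only tests may still need
        return 0      # reads and creations run first

    # sorted() is stable, so scripts with the same rank keep their original order.
    return sorted(scripts, key=rank)
```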

The logs and outputs of each test plan are analyzed by AI. When the AI deems an endpoint vulnerable, humans verify the results to assess the impact of the PVE within the application context.

While we automate as many tasks as possible with AI, human validation remains essential. Our experiments show that human feedback consistently enhances AI's accuracy and reliability.

Hunt for BOLAs in the Wild

Our continuous efforts in testing and scrutinizing open-source projects using BOLABuster have led to the identification and reporting of numerous previously unknown BOLA vulnerabilities, some of which can result in critical privilege escalation. This section provides a brief overview of the vulnerabilities we discovered in three open-source projects: Grafana, Harbor and Easy!Appointments.

Grafana (CVE-2024-1313)

Grafana is a popular data visualization and monitoring tool that allows users to pull data from various sources to observe and understand complex datasets. BOLABuster uncovered CVE-2024-1313, which permits low-privileged users outside an organization to delete a dashboard snapshot belonging to another organization using the snapshot key.

Harbor (CVE-2024-22278)

Harbor is a Cloud Native Computing Foundation (CNCF) graduated container registry that hosts container images and offers features such as role-based access control (RBAC), vulnerability scanning and image signing. BOLABuster identified CVE-2024-22278, which enables a user with a Maintainer role to create, update and delete project metadata. These high-risk actions should be restricted to admins, according to the official documentation.

Easy!Appointments - 15 New Vulnerabilities (CVE-2023-3285 - CVE-2023-3290, CVE-2023-38047 - CVE-2023-38055)

Easy!Appointments is a widely used appointment scheduling and management tool, particularly popular among small businesses. BOLABuster uncovered 15 vulnerable endpoints that allow low-privileged users to bypass authorization controls, leading to potential unauthorized access, data manipulation and full system compromise.

Conclusion

Our research demonstrates the significant potential of AI in revolutionizing vulnerability detection and security research. By leveraging LLMs to automate tasks that were previously manual and time-intensive, we've shown that AI can serve as a reliable assistant. This is true not only for writing code but also for debugging and identifying vulnerabilities.

Although our research is still in its early stages, the implications are profound. The methodology we've developed for BOLA detection can potentially be extended to identify other types of vulnerabilities, opening new avenues for vulnerability research. As AI technology continues to advance, we anticipate that similar approaches will enable a range of security research initiatives that were previously impractical or impossible.

It is also worth noting that this technology can be a double-edged sword. While defenders can use AI to enhance their security measures, adversaries can exploit the same technology to discover zero-day vulnerabilities more quickly and escalate cyberattacks.

The concept of fighting AI with AI has never been more relevant, as we strive to outsmart adversaries with more intelligent and precise AI-driven solutions. It is imperative for the cybersecurity community to remain vigilant and proactive in developing strategies to counteract potential threats posed by AI.

As we use this methodology to identify BOLA vulnerabilities in different products, we responsibly disclose any we find to the appropriate vendors. We also ensure product coverage for vulnerabilities identified, such as those with Grafana, Harbor and Easy!Appointments.

Cortex Xpanse and Cortex XSIAM customers with the ASM module are able to detect all exposed Grafana, Harbor and Easy!Appointments instances as well as known insecure instances via targeted attack surface rules.

If you think you might have been compromised or have an urgent matter, contact the Unit 42 Incident Response team or call:

- North America Toll-Free: 866.486.4842 (866.4.UNIT42)

- EMEA: +31.20.299.3130

- APAC: +65.6983.8730

- Japan: +81.50.1790.0200

Palo Alto Networks has shared these findings with our fellow Cyber Threat Alliance (CTA) members. CTA members use this intelligence to rapidly deploy protections to their customers and to systematically disrupt malicious cyber actors. Learn more about the Cyber Threat Alliance.

Additional Resources

- Exposing a New BOLA Vulnerability in Grafana

- Users outside an organization can delete a snapshot with its key | Grafana Labs

- Identifying a BOLA Vulnerability in Harbor, a Cloud-Native Container Registry

- NVD - CVE-2024-22278

- AI Tool Identifies BOLA Vulnerabilities in Easy!Appointments

- CVE-2023-3285

- CVE-2023-3290

- CVE-2023-38047

- CVE-2023-38055
