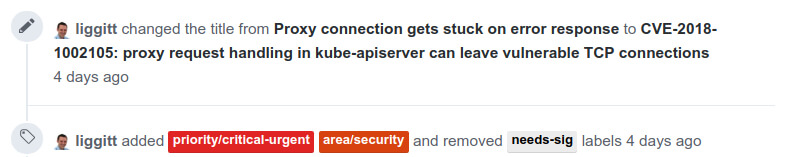

Earlier this week a major vulnerability in Kubernetes was made public by its maintainers. It was originally caught as a bug by Darren Shepherd and was later marked as a critical vulnerability and assigned CVE-2018-1002105. Its implications were clearly laid out on its GitHub issue page by Kubernetes developer Jordan Liggitt. The bug was fixed and new versions were tagged for all supported Kubernetes releases.

Many technology news sites published articles with warnings, and cloud providers followed with their own updates and mitigations (Google, Azure, AWS). At Twistlock, our CTO John Morello authored an excellent post with all the relevant details and Twistlock platform mitigations.

Since then I’ve seen multiple tools and scripts released, and many commercial companies have addressed this vulnerability in dedicated updates. The visibility this vulnerability attracted was much greater than anything I’ve seen for similar vulnerabilities. In all fairness, though, it is not common to find critical security bugs in Go code. This gave me enough motivation to dig in further and produce a working exploit that would prove that the vulnerability really is as critical as suggested.

The vulnerability

Let’s break down the current GitHub issue description:

With a specially crafted request, users that are authorized to establish a connection through the Kubernetes API server to a backend server can then send arbitrary requests over the same connection directly to that backend, authenticated with the Kubernetes API server’s TLS credentials used to establish the backend connection.

The source of the bug is the Kubernetes API server, aka kube-apiserver. For those unfamiliar with it, the API server provides the REST API endpoint through which Kubernetes operations are made. It is most commonly used through kubectl, the Kubernetes command-line tool. It is accessible by all pods by default.

Access to the API server is secured with strong authentication and authorization mechanisms. Both mechanisms are vital to any Kubernetes cluster configuration, and authentication is also enforced internally between system pods. In essence, Authentication is used for securely identifying and trusting users (or vice versa, for trusting the API server), usually with TLS certificates, while the Authorization strategy determines who can do what. RBAC is commonly used for that purpose.

The bug allows an attacker who can send a legitimate, authorized request to the API server to bypass the authorization logic in any subsequent request on the same connection. In other words, the attacker can escalate privileges to those of any user. That’s pretty bad, so let’s figure out how it happened.

By looking at the fixing pull request we learn that the faulty code was in the upgradeaware.go file, in the UpgradeAwareHandler Go type. This handler is used for “proxy requests that may require an upgrade”. The proxy handler works with HTTP traffic, and it can handle HTTP Upgrade requests. The API server needs to support requests over WebSockets, so it uses this handler to manage those connections.

The handler only serves as a proxy however. It passes all valid requests to an API aggregation layer which routes each request to its appropriate underlying server. This aggregation layer allows adding extensions to the Kubernetes API endpoint. The actual API servers run as servers in system pods and the requests from the aggregation layer are routed to them.

Now to the logical flaw in the handler code. When an HTTP request with HTTP Upgrade headers was sent, and the request was authorized for the requested object, the handler would pass it to the aggregation server. Even if the returned response did not carry HTTP status code 101 (Switching Protocols), the response would be sent to the client and the proxy connection to the internal server would still be established, just as it would have been if the connection really had been upgraded to use WebSockets.

The problem is that the proxy connection does not enforce the authorization scheme on any of the subsequent requests. It uses its TLS certificate to authenticate against the internal server, and forwards all requests as they come.

An attacker could send an authorized request to the API server with HTTP Upgrade headers and then continue sending any HTTP requests on the same TCP socket, bypassing authorization control.

The fix involved checking the status code of the internal server’s response and preventing the creation of a proxy connection if no protocol upgrade actually took place.
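To make the flaw and the fix concrete, here is a toy model in Python. It is not the Kubernetes code: `Backend`, `proxy_upgrade` and the `check_status` switch are hypothetical names invented for this sketch, and requests are plain strings rather than real HTTP. It only illustrates the decision described above, namely whether the proxy keeps tunnelling after a response that is not 101.

```python
from dataclasses import dataclass, field

SWITCHING_PROTOCOLS = 101


@dataclass
class Backend:
    """Toy stand-in for an internal API server that trusts the proxy."""
    upgrade_status: int  # status it returns for every request in this model
    served: list = field(default_factory=list)

    def handle(self, request: str) -> int:
        # Record everything that reaches the backend.
        self.served.append(request)
        return self.upgrade_status


def proxy_upgrade(backend, requests, check_status):
    """Forward an authorized Upgrade request, then decide whether to tunnel.

    requests[0] is the authorized Upgrade request; the remaining items are
    whatever the client writes on the same connection afterwards.
    Returns the list of requests that reached the backend.
    """
    status = backend.handle(requests[0])
    if check_status and status != SWITCHING_PROTOCOLS:
        # Fixed behaviour: no upgrade took place, so stop here instead of
        # opening a raw tunnel to the backend.
        return list(backend.served)
    # Tunnel mode: data is copied through with no per-request authorization.
    for follow_up in requests[1:]:
        backend.handle(follow_up)
    return list(backend.served)
```

With `check_status=False` (the vulnerable behaviour) every follow-up request reaches the backend even though the backend never answered 101. With `check_status=True` only the first, authorized request does.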

Possible attack vectors

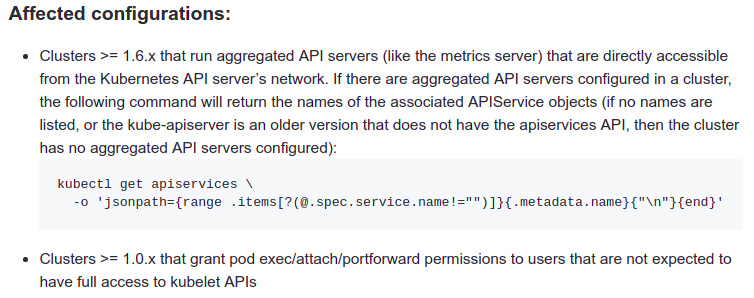

To successfully establish an attack the attacker must be authorized to perform some request to the API server. Unfortunately, the default Kubernetes configuration actually helps the attacker in this scenario.

By default, the RBAC configuration allows any user to perform discovery calls against any aggregated API server. These discovery calls allow any user or pod with access to the API to check for the availability of aggregated API servers. They are also used internally by the node kubelet to perform health checks of the API server.

Anonymous requests are likewise enabled by default, allowing anyone with access to the API endpoint to send valid unauthenticated requests. Specifically, discovery requests can be performed anonymously with no user identification. So with the default configuration, an attacker with access to the API server, from outside or from inside a pod, could use this vulnerability to escalate privileges to any user in the scope of an aggregated API server, without any additional prerequisites.
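One way to audit this default is to inspect the arguments the API server was started with. The sketch below assumes you have already collected the kube-apiserver command line as a list of strings; the function name `anonymous_auth_enabled` is hypothetical. It relies on the upstream `--anonymous-auth` flag, which is on unless explicitly set to false.

```python
def anonymous_auth_enabled(args):
    """Return True if the given kube-apiserver arguments leave anonymous
    requests enabled.

    Assumption: `args` is the process command line split into a list.
    Unrecognized values are treated as "enabled" to stay on the safe side.
    """
    enabled = True  # upstream default when the flag is absent
    for arg in args:
        if arg == "--anonymous-auth":
            # A bare boolean flag means true.
            enabled = True
        elif arg.startswith("--anonymous-auth="):
            value = arg.split("=", 1)[1].strip().lower()
            enabled = value not in ("false", "0", "f")
    return enabled
```

For example, `anonymous_auth_enabled(["kube-apiserver", "--secure-port=6443"])` reports the default, enabled state. Note that this only reads the flag; it does not tell you which RBAC bindings exist for unauthenticated users.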

In order for a request to pass through the vulnerable logic, it needs to either be targeted at an aggregated API server, or be one of the exec, attach or portforward actions on a pod object. The latter requires the attacker to be authenticated as a user with authorization to perform one of these actions on a pod. In my exploit I focused on aggregated API servers, since they can ultimately be attacked from any pod through the discovery calls.
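The two conditions above can be sketched as a small path classifier, which may be handy when scanning API server audit logs for requests worth a closer look. This is a simplification under stated assumptions: `reaches_upgrade_proxy` and `aggregated_groups` are hypothetical names, the set of aggregated API groups must be supplied by you, and anything under `/apis/<group>` for such a group is treated as proxied.

```python
import re

# Pod subresources that are streamed through the upgrade-aware proxy.
POD_STREAM_RE = re.compile(
    r"^/api/v1/namespaces/[^/]+/pods/[^/]+/(exec|attach|portforward)$"
)


def reaches_upgrade_proxy(path, aggregated_groups):
    """Return True if a request path falls into one of the two categories:
    a pod exec/attach/portforward call, or a call under an aggregated API group.
    """
    # Drop the query string and any trailing slash before matching.
    path = path.split("?", 1)[0].rstrip("/")
    if POD_STREAM_RE.match(path):
        return True
    parts = path.split("/")
    # "/apis/<group>/..." splits into ["", "apis", "<group>", ...]
    return len(parts) >= 3 and parts[1] == "apis" and parts[2] in aggregated_groups
```

Built-in groups such as `apps` are served by the API server itself, so they only match if you list them in `aggregated_groups` by mistake.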

One of the questions I was immediately concerned with was whether any aggregated API servers are installed by default on Kubernetes clusters. It turns out that since Kubernetes 1.8, metrics-server is deployed by default when the kube-up.sh script is used. This Metrics Server API powers the kubectl top command, replacing heapster.

The metrics server signs up its aggregated API under /apis/metrics.k8s.io/, where it provides metrics information about every node and pod on the cluster. By using the vulnerability, with a discovery call I could escalate privileges and leak information about the whole cluster from any pod on the cluster.

Another interesting extension API server is the Service Catalog, which is installed by default on OpenShift from version 3.7(1) and presumably on Kubernetes clusters deployed by cloud providers.

Exploitation

Now that we have a better understanding of the vulnerability, let’s see an exploit in action. I wrote a super simple Ruby script that exploits the vulnerability against the metrics server and leaks information on pods from all namespaces in the cluster. It can be executed from any pod and should work as long as metrics-server is deployed and the cluster uses the default configuration.

In the exploit code, you may notice the second request includes the X-Remote-User header. This header allows impersonating any user on the cluster. X-Remote-Group can also be added if needed.

One tricky part of the exploitation was finding a user that is always configured to have access to the metrics server. There was no such user, but after digging a little in the documentation, I found that by using the format system:serviceaccount:{namespace}:{username} I could impersonate any Service Account. Service Accounts can be thought of as internal system users.

I looked at my ClusterRoles configuration and decided to use system:serviceaccount:kube-system:horizontal-pod-autoscaler, because it has a default ClusterRoleBinding that allows it to access the metrics server. To exploit other services, you could probably use any other service account with appropriate binding.
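As a small illustration of that naming scheme, the sketch below builds and parses Service Account usernames. The helper names `service_account_username` and `parse_service_account_username` are hypothetical; only the `system:serviceaccount:` prefix and the example account come from the text above.

```python
SERVICE_ACCOUNT_PREFIX = "system:serviceaccount"


def service_account_username(namespace, name):
    """Build the username Kubernetes uses for a Service Account."""
    if not namespace or not name or ":" in namespace or ":" in name:
        raise ValueError("namespace and name must be non-empty and contain no ':'")
    return f"{SERVICE_ACCOUNT_PREFIX}:{namespace}:{name}"


def parse_service_account_username(username):
    """Return (namespace, name) for a Service Account username, else None."""
    parts = username.split(":")
    if len(parts) != 4 or parts[:2] != ["system", "serviceaccount"]:
        return None
    if not parts[2] or not parts[3]:
        return None
    return parts[2], parts[3]
```

A parser like this can help when reviewing audit logs: an X-Remote-User value that names a Service Account on a connection opened by an unrelated client is a strong hint that something is off.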

Proof of concept

In the following demo, I ran the PoC code inside a fresh nginx pod, and successfully got back a JSON list including all the pods in my cluster.

Detection

Liggitt did a great job in the issue explaining how to easily determine whether your configuration is affected.

In the issue page he also lists potential mitigations for every scenario, but none can really be applied without breaking anything else in the cluster. My recommendation is to update the cluster to one of the fixed Kubernetes releases.
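If you want to check versions programmatically, a rough sketch follows. The patch levels in `FIXED_PATCH` (1.10.11, 1.11.5, 1.12.3, with 1.13 and later treated as fixed) are recalled from the upstream advisory rather than stated in this post, so verify them against the issue page before relying on them. The function names are hypothetical, pre-release tags of 1.13 are ignored, and vendor builds with backported fixes (for example a managed cloud offering) will not be judged correctly.

```python
import re

# Assumption: first fixed patch release per supported minor line,
# taken from the upstream advisory. Verify before use.
FIXED_PATCH = {(1, 10): 11, (1, 11): 5, (1, 12): 3}


def parse_version(version):
    """Parse strings like 'v1.10.11' or '1.12.3-gke.1' into (major, minor, patch)."""
    match = re.match(r"^v?(\d+)\.(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version: {version!r}")
    major, minor, patch = match.groups()
    return int(major), int(minor), int(patch)


def is_patched(version):
    """Return True if the upstream release carries the fix for CVE-2018-1002105."""
    major, minor, patch = parse_version(version)
    if (major, minor) in FIXED_PATCH:
        return patch >= FIXED_PATCH[(major, minor)]
    # Older minor lines received no fix; 1.13 and later include it.
    return (major, minor) >= (1, 13)
```

The server version string itself can be read from the output of kubectl version.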

To Twistlock customers, I would also like to add that besides identifying the CVE in the vulnerability monitor and offering a compliance check to disable anonymous authorization (both mentioned in John’s post), the Twistlock platform should also detect traffic between pods that shouldn’t talk to each other. So if a pod accesses the API server when it is not expected to, Twistlock would alert on this event in the radar.

Ending note

I hope this post cleared some of the fog around this vulnerability. Feel free to reach out to me with any questions you may have, through email or at @TwistlockLabs. Happy holidays!

(1) Discussed in more depth in the Red Hat OpenShift blog post on the vulnerability
